Videos

Tech Talk with Kashmir Hill: Your Face Belongs to Us

Kashmir Hill has been working on a book about facial recognition technology for the last three years. In doing so, she tracked down the early pioneers, found the people fighting against the worst impulses for the technology, and dove into the history of Clearview AI, the groundbreaking startup that first drew her into the topic by building a radical person-finding app that giants in the field, including Google and Facebook, had deemed taboo. Full of previously unreported information and scoops, the book will leave readers with a greater understanding of how we got to this point and how to prepare for the future to come. Kashmir Hill is a tech reporter at The New York Times and the author of YOUR FACE BELONGS TO US. She writes about the unexpected and sometimes ominous ways technology is changing our lives, particularly when it comes to our privacy. She joined The Times in 2019, after having worked at Gizmodo Media Group, Fusion, Forbes Magazine, and Above the Law. Her writing has appeared in The New Yorker and The Washington Post. She has degrees from Duke University and New York University, where she studied journalism.

Tech Talk with Barry Friedman: Keeping Personal Data Safe From Law Enforcement

Barry Friedman serves as the Faculty Director of the Policing Project at New York University School of Law, where he is the Jacob D. Fuchsberg Professor of Law and Affiliated Professor of Politics. The Policing Project is dedicated to strengthening policing through ordinary democratic processes; it drafts best practices and policies for policing agencies, including on issues of technology and surveillance, assists with transparency, conducts cost-benefit analysis of policing practices, and leads engagement efforts between policing agencies and communities. Friedman has taught, litigated, and written about constitutional law, the federal courts, policing, and criminal procedure for over thirty years. Friedman is the author of Unwarranted: Policing Without Permission (Farrar, Straus and Giroux, February 2017), and has written numerous articles in scholarly journals, including on democratic policing, alternatives to police responses to 911 calls, and the Fourth Amendment. As part of the Tech Policy Lab’s ongoing Tech Talk series, Professor Friedman will visit the University of Washington School of Law to discuss the growing network of police surveillance and the confrontation between constitutional rights and mass government surveillance.

Distinguished Lecture with Danielle Citron | The Fight for Privacy: Protecting Dignity, Identity, and Love in the Digital Age

Privacy is disappearing. From our sex lives to our workout routines, the details of our lives once relegated to pen and paper have joined the slipstream of new technology. When intimate privacy becomes data, corporations know exactly when to flash that ad for a new drug or pregnancy test. Social and political forces know how to manipulate what you think and who you trust, leveraging sensitive secrets and deepfake videos to ruin or silence opponents. And as new technologies invite new violations, people have power over one another like never before, from revenge porn to blackmail, attaching life-altering risks to growing up, dating online, or falling in love. As part of the Tech Policy Lab’s Distinguished Lecture series, author Danielle Citron will visit the University of Washington to discuss her new book, The Fight for Privacy: Protecting Dignity, Identity, and Love in the Digital Age. A masterful new look at privacy in the twenty-first century, The Fight for Privacy takes the focus off Silicon Valley moguls to investigate the price we pay as technology migrates deeper into every aspect of our lives. Danielle Citron is the Jefferson Scholars Foundation Schenck Distinguished Professor in Law and Caddell and Chapman Professor of Law at the University of Virginia, where she writes and teaches about privacy, free expression and civil rights. Her scholarship and advocacy have been recognized nationally and internationally. For the past decade, Citron has worked with lawmakers, law enforcement and tech companies to combat online abuse and to protect intimate privacy. Her first book, Hate Crimes in Cyberspace (Harvard University Press, 2014), was widely praised in published reviews, discussed in blog posts, and named one of the 20 Best Moments for Women in 2014 by the editors of Cosmopolitan magazine.
In 2019, Citron was named a MacArthur Fellow based on her work on cyberstalking and intimate privacy. Citron is the inaugural director of the University of Virginia’s LawTech Center and is an affiliate scholar at the Stanford Center on Internet and Society, the Yale Information Society Project, and NYU’s Policing Project.

We Robot 2022 Day 2 Afternoon Session

The Tech Policy Lab hosted We Robot 2022 at the University of Washington School of Law. This video is from the afternoon session of the second day (September 16th).

We Robot 2022 Day 2 Morning Session

The Tech Policy Lab hosted We Robot 2022 at the University of Washington School of Law. This video is from the morning session of the second day (September 16th).

We Robot 2022 Day 1 Afternoon Session

The Tech Policy Lab hosted We Robot 2022 at the University of Washington School of Law. This video is from the afternoon session of the first day (September 15, 2022).

Lessons Learned in Interdisciplinary Tech & Society Scholarship: A Conversation with Pam Samuelson

The Tech Policy Lab hosted an interdisciplinary panel with Pam Samuelson of the University of California, Berkeley, School of Law. The faculty panel with Professor Samuelson was entitled Lessons Learned in Interdisciplinary Tech and Society Scholarship: A Conversation with Pam Samuelson. The discussion featured a cross-disciplinary panel bringing faculty from across the UW campus into dialogue with Pam on the topic of interdisciplinary research in technology and society. Panelists included the Tech Policy Lab’s own Tadayoshi Kohno and Ryan Calo, as well as Mary Fan (Law) and Saadia Pekkanen (International Studies). About Professor Samuelson: Pamela Samuelson is the Richard M. Sherman Distinguished Professor of Law and Information at the University of California, Berkeley. She is recognized as a pioneer in digital copyright law, intellectual property, cyberlaw and information policy. Since 1996, she has held a joint appointment at Berkeley Law School and UC Berkeley’s School of Information. Samuelson is a director of the internationally renowned Berkeley Center for Law & Technology. She is co-founder and chair of the board of Authors Alliance, a nonprofit organization that promotes the public interest in access to knowledge. She also serves on the board of directors of the Electronic Frontier Foundation, as well as on the advisory boards for the Electronic Privacy Information Center, the Center for Democracy & Technology, Public Knowledge, and the Berkeley Center for New Media.

Tech Talk with Joseph Turow: Voiceprints, Bio-Profiling, and the Future of Freedom

The Tech Policy Lab hosted a Tech Talk with Joseph Turow, the Robert Lewis Shayon Professor of Communication at the Annenberg School for Communication at the University of Pennsylvania. The title of his talk was Voiceprints, Bio-Profiling, and the Future of Freedom: The Rise of the Voice Intelligence Industry. In this talk, Professor Turow examined the burgeoning voice intelligence industry and the historical precedents for how various forms of information gathering on consumers have grown over time.

Panel Discussion with Neal Stephenson, author of REAMDE

A 2012 panel discussion with Neal Stephenson, author of REAMDE, hosted by the Law, Technology, and Arts program. TPL Co-Director Ryan Calo hosts and moderates, while TPL Co-Director Yoshi Kohno joins the panel. A prescient conversation that, back in 2012, anticipated NFTs and the metaverse.

Distinguished Lecture with Langdon Winner | Technological Innovation and the Malaise of Democracy

2021 Tech Policy Lab Distinguished Lecture: "Technological Innovation and the Malaise of Democracy." Decades of enthusiasm for the magic of digital devices has generated a society largely passive as regards democratic participation in the shaping of new technologies that will affect how we live. We’ve learned to accept and celebrate whatever flows from the Silicon Valley pipeline, even when the results undermine personal privacy and concentrate wealth and power in the hands of a scant few. Initiatives in “technology assessment” from earlier times encouraged popular participation and careful reflection upon choices in this realm. Can this approach be revived? Langdon Winner is a political theorist who focuses upon social and political issues that surround modern technological change. Among his writings are Autonomous Technology, a study of the idea of "technology-out-of-control" in modern social thought, and The Whale and the Reactor: A Search for Limits in an Age of High Technology. Professor Winner is currently the Thomas Phelan Chair of Humanities and Social Sciences at Rensselaer Polytechnic Institute in upstate New York. In 2020 he was awarded the J.D. Bernal Prize for lifetime achievement in science and technology studies by the Society for Social Studies of Science. His works of satire include “The Masked Marauders” (a send-up of 1960s rock super-session albums) and “Introducing the Automatic Professor Machine” (a comic vision of educational technology). Langdon now lives on Maquoit Bay in Maine.

Atlas of AI – A conversation with Kate Crawford and Ryan Calo, Co-hosted by the UW Tech Policy Lab and Center for an Informed Public

On Thursday, May 13th, the University of Washington’s Tech Policy Lab and Center for an Informed Public co-hosted a virtual book talk featuring Kate Crawford, a leading scholar of the social implications of artificial intelligence and author of the recently published book, Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence. The book talk was moderated by TPL and CIP co-founder and UW School of Law professor Ryan Calo.

Northwest Science Writers Association Book Event for TPL Telling Stories Book

The Northwest Science Writers Association hosted an event featuring the Tech Policy Lab's Telling Stories Book. Lab Co-Directors Batya Friedman and Ryan Calo, along with featured author Nnenna Nwakanma, read their respective stories from the book and discussed the inspiration for the book and the individual stories.

Taking Care: Code and Control in a Technological Future | Taeyoon Choi

Taeyoon Choi is an artist, organizer, teacher and cofounder of the School for Poetic Computation, an artist-run school in New York City with a motto of “More Poetry, Less Demo.” In this Distinguished Lecture, Choi considers what care means for a technologically oriented future. Choi will first draw on his more than seven years of teaching and co-organizing the School for Poetic Computation. In 2019, the School initiated satellite programs in Detroit, USA, and Yamaguchi, Japan. Choi will discuss the lessons he learned working in and with different cultural and geographical contexts, including a proposal for distributed, peer-to-peer learning. In the second half of the lecture, Choi will lead the audience in a participatory activity based on the Distributed Web of Care, an art project and initiative to “code-to-care” and “code carefully”. This project imagines the future of the internet from a perspective of care, focusing on personhood in relation to accessibility, identity, and the environment, with the intention of creating a distributed future that is built with trust and care, where diverse communities are prioritized and supported.

Hacking Elections: A Conversation with Matt Tait

On November 28th, 2018, the Tech Policy Lab organized an interview with cybersecurity expert and former British intelligence officer Matt Tait on foreign interference in the midterm elections. Tait, who helped detect Russian interference in the 2016 election, discussed a range of topics, including the meddling in the midterms, the status of the Russia investigation, and what can be done about foreign interference in a digital age. Matt Tait is a senior fellow at the Robert S. Strauss Center for International Security and Law at the University of Texas at Austin, where he teaches cybersecurity to law and public policy students. Previously, Tait worked in private industry as a consultant to US tech companies such as Microsoft and Amazon, and worked in Google’s Project Zero security team. Prior to that, Tait worked on cybersecurity issues at the UK’s GCHQ intelligence agency. Tait can be found on Twitter at @pwnallthethings. Matt Tait was interviewed by Megan Finn, assistant professor at the University of Washington Information School.

AI in Public Sector: Tool for inclusion or exclusion?

Applications of Machine Learning (ML) and Artificial Intelligence (AI) in the public sector are broad and growing, helping make decisions in welfare payments, immigration, fraud detection, healthcare, transportation, etc. However, the social equity and diversity implications of ML & AI in this realm are unclear. The panel will discuss the potential social and economic impact and ethical considerations of ML & AI in the public realm.

Moderator: Ed Lazowska, Professor, Paul G. Allen School

Panelists: Emily Bender, Professor, UW Linguistics; Shankar Narayan, Tech and Liberty Project Director, ACLU of WA; Oren Etzioni, CEO, AI2; Emily Keller, Program Manager, Urbanalytics, iSchool; Ryan Calo, Associate Professor, UW Law School; Michael Phillips, Associate General Counsel, Microsoft

AI Now: Social and Political Questions for Artificial Intelligence | Kate Crawford

On March 6th, Kate Crawford gave the Tech Policy Lab’s Spring Distinguished Lecture on “AI Now: Social and Political Questions for Artificial Intelligence.” The impact of early AI systems is already being felt, bringing with it challenges and opportunities, and laying the foundation on which future advances in AI will be integrated into social and political domains. The potential wide-ranging impact makes it necessary to look carefully at the ways in which these technologies are being applied now, whom they’re benefiting, and how they’re structuring our social, economic, and interpersonal lives. Kate Crawford is the co-founder (with Meredith Whittaker) of the AI Now Institute, a New York-based research center working across disciplines to understand the social and economic implications of artificial intelligence. She is a principal researcher at Microsoft Research New York City, a visiting professor at MIT’s Center for Civic Media, and a senior fellow at NYU’s Information Law Institute. Her research addresses the social implications of large-scale data, machine learning and AI. Recent publications address the topics of data discrimination, social impacts of artificial intelligence, predictive analytics and due process, ethical review for data science, and algorithmic accountability.

Poison Arrows and Other ‘Killer Apps’: A Hunter-Gatherer Perspective on Tech and our Future | James Suzman

In November 2017, James Suzman joined the Tech Policy Lab to give our fall Distinguished Lecture on “Poison Arrows and Other ‘Killer Apps’: A Hunter-Gatherer Perspective on Tech and our Future.” Dr. Suzman discussed what a better understanding of hunter-gatherers might teach us about technology and sustainability. The success of a civilization can be measured by its longevity. In that light, Southern Africa’s hunting and gathering San (“Bushmen”) are the most enduring (and successful) civilization in the history of modern Homo sapiens. What tools do the San use, and how has their tool use contributed to such longevity? What of modern society? Despite technological advancements that have enabled levels of productivity unimaginable a hundred years ago, the modern world faces broad sustainability challenges. How might a better understanding of sustainable hunter-gatherer societies like the San help us respond to the social and economic impacts of modern technology, including challenges from increasing automation and computerization? How might such understanding help us to meet broader sustainability challenges? With a head full of Laurens van der Post and half an anthropology degree from St Andrews University under his belt, James Suzman hitched a ride into Botswana’s eastern Kalahari in June 1991. He has been living and working with Kalahari peoples ever since. Dr. Suzman holds a Ph.D. in social anthropology from Edinburgh University, which he was awarded in 1996. Since then he has lived and worked with every major Bushman group in southern Africa, from the war-ravaged Vasakele !Kung of southern Angola during the final phases of that country’s civil war to the highly marginalized Hai//om of Namibia’s Etosha National Park. Dr. Suzman is the author of Affluence without Abundance: The Disappearing World of the Bushmen, published by Bloomsbury in 2017.

CHI 2017: Toys That Listen

The Lab’s research paper Toys That Listen: A Study of Parents, Children, and Internet-Connected Toys was presented at CHI ’17: ACM CHI Conference on Human Factors in Computing Systems during the Emerging Privacy session.

My Politics as a Technologist | Terry Winograd and Alan Borning

On November 30, 2016, the Tech Policy Lab organized a discussion at the intersection of personal politics and technical expertise with Terry Winograd and Alan Borning. Professor Terry Winograd is a leader in human-computer interaction and the design of technologies for development. Professor Winograd advised the creators of Google and was a founding member of Computer Professionals for Social Responsibility. Professor Emeritus Alan Borning is an expert in programming languages and human-computer interaction. Professor Borning pioneered information systems for civic engagement, among them OneBusAway, a set of digital tools that provide real-time transit information, UrbanSim (think SimCity for real) and the Living Voters Guide, an experiment in social media for an informed electorate.

Artificial Intelligence: Law and Policy

Deterrence in the 21st Century: From Nuclear, To Space, To Cyberspace | General Kevin Chilton

General Chilton served 34 1/2 years in the US Air Force in various flying and staff positions and retired in 2011 as the Commander of U.S. Strategic Command, responsible for the plans and operations of all U.S. forces conducting strategic deterrence and DoD space and cyberspace operations. Prior to his work in Strategic Command, General Chilton commanded Air Force Space Command. During part of his Air Force career, he served with NASA, was a Command Astronaut Pilot, and flew three Space Shuttle missions. General Chilton has a BS in engineering from the USAF Academy, a master's degree in mechanical engineering from Columbia University, and an honorary Doctor of Laws degree from Creighton University.

How Technology Impacts Humans | Latanya Sweeney

Technology designers are new policy makers. No one elected them and most people do not know their names, but the arbitrary decisions they make when producing the latest gadgets and online innovations dictate the code by which we conduct our daily lives and govern our country. As technology progresses, every democratic value and every law comes up for grabs and will likely be redefined by what technology enables or not. Privacy and security were just the first wave. In this talk, let’s see how it all fits together or falls apart. As a professor at Harvard University, Latanya Sweeney creates and uses technology to assess and solve societal, political and governance problems, and teaches others how to do the same. One focus area is the scientific study of technology’s impact on humankind, and she is the Editor-in-Chief of the newly formed journal Technology Science. She was formerly the Chief Technology Officer at the Federal Trade Commission. She is an elected fellow of the American College of Medical Informatics and has founded 3 company spin-offs; her work includes almost 100 academic publications and 3 patents and is explicitly cited in 2 government regulations. She has received numerous professional and academic awards, and has testified before federal and international government bodies. Professor Sweeney earned her PhD in computer science from the Massachusetts Institute of Technology, the first Black woman to do so. Her undergraduate degree in computer science was completed at Harvard University. latanyasweeney.org.

What is Administrative Law?

What is a Robot?

In our research, we found 3 broad approaches that scholars use to define robots: (1) as artificial humans, (2) as programmable machines, and (3) as machines that can sense, think, and act on the world. None of these approaches offers a definition that works for all of the ways we already use the word. But they're a start.

What is Machine Learning?

What is an Algorithm?

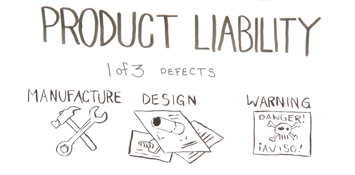

What is Product Liability?

Product liability is the area of law in which consumers can bring claims against manufacturers and sellers for products that injure people. To sue for product liability, you need to show only 1 of 3 things to hold the manufacturer liable.

What is a Bot?

Bots are automated software programs, designed by human programmers to do tasks on the web. Bots have been around since the beginning of the internet. As a recent New York Times article says, bots are getting smarter and easier to create. As bots become more convincing, we as users and researchers need to be careful about what we are being convinced of.

Computer Security and the Internet of Things – Faculty Co-Director Tadayoshi Kohno presents at Usenix Enigma 2016

Tech Policy Lab Faculty Co-Director Kohno's talk explored case studies in the design and analysis of computer systems for several types of everyday objects, including wireless medical devices, children's toys, and automobiles. He discussed the discovery of security risks in leading examples of these technologies, the challenges to securing these technologies, the ecosystem leading to their vulnerabilities, and new directions for security and privacy. He covered efforts (in collaboration with UC San Diego) to compromise the computers in an automobile from a thousand miles away, along with the implications and consequences of this and other work. He also outlined directions for mitigating computer security and privacy risks, including both technical directions and education.

Computers, Privacy, and Data Protection 2016: Panel on Toys That Listen

This panel on Toys That Listen, organized by the Tech Policy Lab, brought together an interdisciplinary group of experts to discuss best practices for privacy, consumer protection, and user control regarding connected devices in the home.

FTC Start with Security | Panel 2: Integrating Security into the Development Pipeline

FTC Start with Security | Avoiding Catastrophe: An Introduction to OWASP Proactive Controls

FTC Start with Security | Panel 3: The Business Case for Security

FTC Start with Security | Panel 1: Building a Security Culture

FTC Start with Security | Panel 4: Securing the Internet of Things

Connected devices present new security challenges and expanded attack surfaces. How can startups secure their IoT products and services in a rapidly developing ecosystem? This panel will address how IoT startups can identify and manage critical risks in their businesses and plan for the unique challenges they face.

Responsible innovation: A cross-disciplinary lens on privacy and security challenges

The Tech Policy Lab's Faculty Co-Directors were the featured speakers for November's installment of the 2015 Engineering Lecture Series, where they discussed what it means to innovate responsibly, particularly with respect to privacy and security. How do you interact and socialize? How do you conduct business? How do you raise your kids and care for the elderly? All these basic activities are being directly impacted by new technologies that are emerging at an incredible rate. While each new technology brings its own benefits and risks, regulations struggle to catch up.

WeRobot 2015 Keynote: An Evening with Tony Dyson

WeRobot 2015 Panel 2: “Robot Passports”

Author: Anupam Chander; Discussant: Ann Bartow; Paper: http://bit.ly/1QBX2fp. Can international trade law, which after all seeks to liberalize trade in both goods and services, help stave off attempts to erect border barriers to this new type of trade? The smart objects of the 21st century consist of both goods and information services, and thus are subject to multiple means of government protectionism, but also trade liberalization. This paper is the first effort to locate and analyze the Internet of Things and modern robotics within the international trade framework.

WeRobot 2015 Panel 8: “Operator Signatures for Teleoperated Robots”

Authors: Tamara Bonaci, Aaron Alva, Jeffrey Herron, Ryan Calo, Howard Chizeck; Discussant: Margot Kaminski; Paper: http://bit.ly/1GBw1Wz. This paper discusses legal liability and evidentiary issues that operator signatures could occasion or help to resolve. We first provide background on teleoperated robotic systems and introduce the concept of operator signatures. We then discuss some cyber-security risks that may arise during teleoperated procedures, and describe the three main tasks operator signatures seek to address: identification, authentication, and real-time monitoring. Third, we discuss legal issues that arise for each of these tasks. We discuss what legal problems operator signatures help mitigate. We then focus on liability concerns that may arise when operator signatures are used as a part of a real-time monitoring and alert tool. We consider the various scenarios where actions are conducted on the basis of an operator signature alert. Finally, we provide preliminary guidance on how to balance the need to mitigate cyber-security risks with the desire to enable adoption of teleoperation.

WeRobot 2015 Panel 7: “The Presentation of the Machine in Everyday Life”

Authors: Karen Levy & Tim Hwang; Discussant: Evan Selinger; Paper: http://bit.ly/1bMxso7. As policy concerns around intelligent and autonomous systems come to focus increasingly on transparency and usability, the time is ripe for an inquiry into the theater of autonomous systems. When do (and when should) law and policy explicitly regulate the optics of autonomous systems (for instance, requiring electric vehicle engines to “rev” audibly for safety reasons) as opposed to their actual capabilities? What are the benefits and dangers of doing so? What economic and social pressures compel a focus on system theater, and what are the ethical and policy implications of such a focus?

WeRobot 2015 Panel 1: “Who’s Johnny? (Anthropomorphizing Robots)”

Author: Kate Darling; Discussant: Ken Goldberg; Paper: http://bit.ly/1bxvbfR. As we increasingly create spaces where robotic technology interacts with humans, our tendency to project lifelike qualities onto robots raises questions around use and policy. Based on a human-robot-interaction experiment conducted in our lab, this paper explores the effects of anthropomorphic framing in the introduction of robotic technology. It discusses concerns about anthropomorphism in certain contexts, but argues that there are also cases where encouraging anthropomorphism is desirable. Because people respond to framing, framing could serve as a tool to separate these cases.

WeRobot 2015 Panel 9: “Robot Economics”

WeRobot 2015 Panel 6: “Unfair and Deceptive Robots”

Author: Woodrow Hartzog; Discussant: Ryan Calo; Paper: http://bit.ly/1KoSy7E. What should consumer protection rules for robots look like? The FTC’s grant of authority and existing jurisprudence make it the preferable regulatory agency for protecting consumers who buy and interact with robots. The FTC has proven to be a capable regulator of communications, organizational procedures, and design, which are the three crucial concepts for safe consumer robots. Additionally, the structure and history of the FTC show that the agency is capable of fostering new technologies as it did with the Internet. The agency defers to industry standards, avoids dramatic regulatory lurches, and cooperates with other agencies. Consumer robotics is an expansive field with great potential. A light but steady response by the FTC will allow the consumer robotics industry to thrive while preserving consumer trust and keeping consumers safe from harm.

WeRobot 2015 Panel 10: “Personal Responsibility and Neuroprosthetics”

Authors: Patrick Moore, Timothy Brown, Jeffrey Herron, Margaret Thompson, Tamara Bonaci, Sara Goering, Howard Chizeck; Discussant: Meg Leta Jones; Paper: http://bit.ly/1J4Mtfp. This paper investigates whether giving users volitional control over therapeutic brain implants is ethically and legally permissible. We believe that it is not only permissible—it is in fact advantageous when compared to the alternative of making such systems’ operation entirely automatic. From an ethical perspective, volitional control maintains the integrity of the self by allowing the user to view the technology as restoring, preserving, or enhancing one’s abilities without the fear of losing control over one’s own humanity. This preservation of self-integrity carries into the legal realm, where giving users control of the system keeps responsibility for the consequences of its use in human hands.

WeRobot 2015 Panel 3: “Robotics Governance”

Panelists: Peter Asaro, Jason Millar, Kristen Thomasen, & David Post. Asaro, “Regulating Robots: A Multi-Scale Approach to Developing Robot Policy and Technology” http://bit.ly/1H1fZE8; Millar, “Sketching an Ethics Evaluation Tool for Robot Design and Governance” http://bit.ly/1Fsqro4; Thomasen, “Driving Lessons: Learning from the History of Automobile Regulation to Legislate Better Drones” http://bit.ly/1DQQrnx.

WeRobot 2015 Panel 5: “Law and Ethics of Telepresence Robots”

Authors: J. Nathan Matias, Chelsea Barabas, Chris Bavitz, Cecillia Xie, & Jack Xu; Discussant: Laurel Riek; Paper: http://bit.ly/1KoSy7n. The deployment of telepresence robots creates enormous possibilities for enhanced long-distance interactions, educational opportunities, and bridging of social and cultural gaps. The use of telepresence robots raises some legal and ethical issues, however. This proposal outlines the development of a law and ethics toolkit directed to those who operate and allow others to operate telepresence robots, describing some of the potential legal and ethical issues that arise from their use and offering proposed responses and means of addressing and allocating risk.

WeRobot 2015 Panel 4: “Regulating Healthcare Robots”

Authors: Drew Simshaw, Nicolas Terry, Kris Hauser, M.L. Cummings; Discussant: Cindy Jacobs; Paper: http://bit.ly/1Csn4s0. There are basic, pressing issues that need to be addressed in the near future in order to ensure that robots are able to maintain sustainable innovation with the confidence of providers, patients, consumers, and investors. We will only be able to maximize the potential of robots in healthcare through responsible design, deployment, and use, which must include taking into consideration potential issues that could, if overlooked, manifest themselves in ways that harm patients and consumers, diminish the trust of key stakeholders of robots in healthcare, and stifle long-term innovation by resulting in overly restrictive reactionary regulation. In this paper, we focus on the issues of patient and user safety, security, and privacy, and specifically the effect of medical device regulation and data protection laws on robots in healthcare.

Responsible Innovation in the Age of Robots and Smart Machines | Jeroen van den Hoven

Many of the things we do to each other in the 21st century, both good and bad, we do by means of smart technology. Drones, robots, cars, and computers are a case in point. Military drones can help protect vulnerable, displaced civilians; at the same time, drones that do so without clear accountability give rise to serious moral questions when unintended deaths and harms occur. More generally, the social benefits of our smart machines are manifold; the potential drawbacks and moral quandaries extremely challenging. In this talk, I take up the question of responsible innovation, drawing on the European Union experience and reconsidering the relations between ethics and design. I shall introduce ‘Value Sensitive Design’, one of the most promising approaches, and provide illustrations from robotics, AI and drone technology to show how moral values can be used as requirements in technical design. By doing so we may overcome problems of moral overload and conflicting values by design. Jeroen van den Hoven is full professor of Ethics and Technology at Delft University of Technology; he is editor-in-chief of Ethics and Information Technology. He was the first scientific director of 3TU.Ethics (2007-2013). He won the World Technology Award for Ethics in 2009, as well as the IFIP prize for ICT and Society, also in 2009, for his work in Ethics and ICT.

Cory Doctorow: Alice, Bob and Clapper: What Snowden Taught Us About Privacy

The Center for Digital Arts and Experimental Media, the Henry Art Gallery, and the UW Tech Policy Lab presented a lecture with author and activist Cory Doctorow. It's the 21st century and the Internet is the nervous system of the information age. Treating it as a platform for jihad recruitment that incidentally does some ecommerce and video on demand around the edges is blinkered, depraved indifference. The news that the world's spies have been industriously converting every wire, fiber and chip into part of a surveillance apparatus actually pales in comparison to the news that the NSA spends $250,000,000 every year to undermine the security of the devices we trust our lives to, literally. Can technology give us privacy, or only take it away? Are we headed for Orwell's future? Huxley's? Kafka's? Do we have to choose, or do we get all three (if we're not careful)?